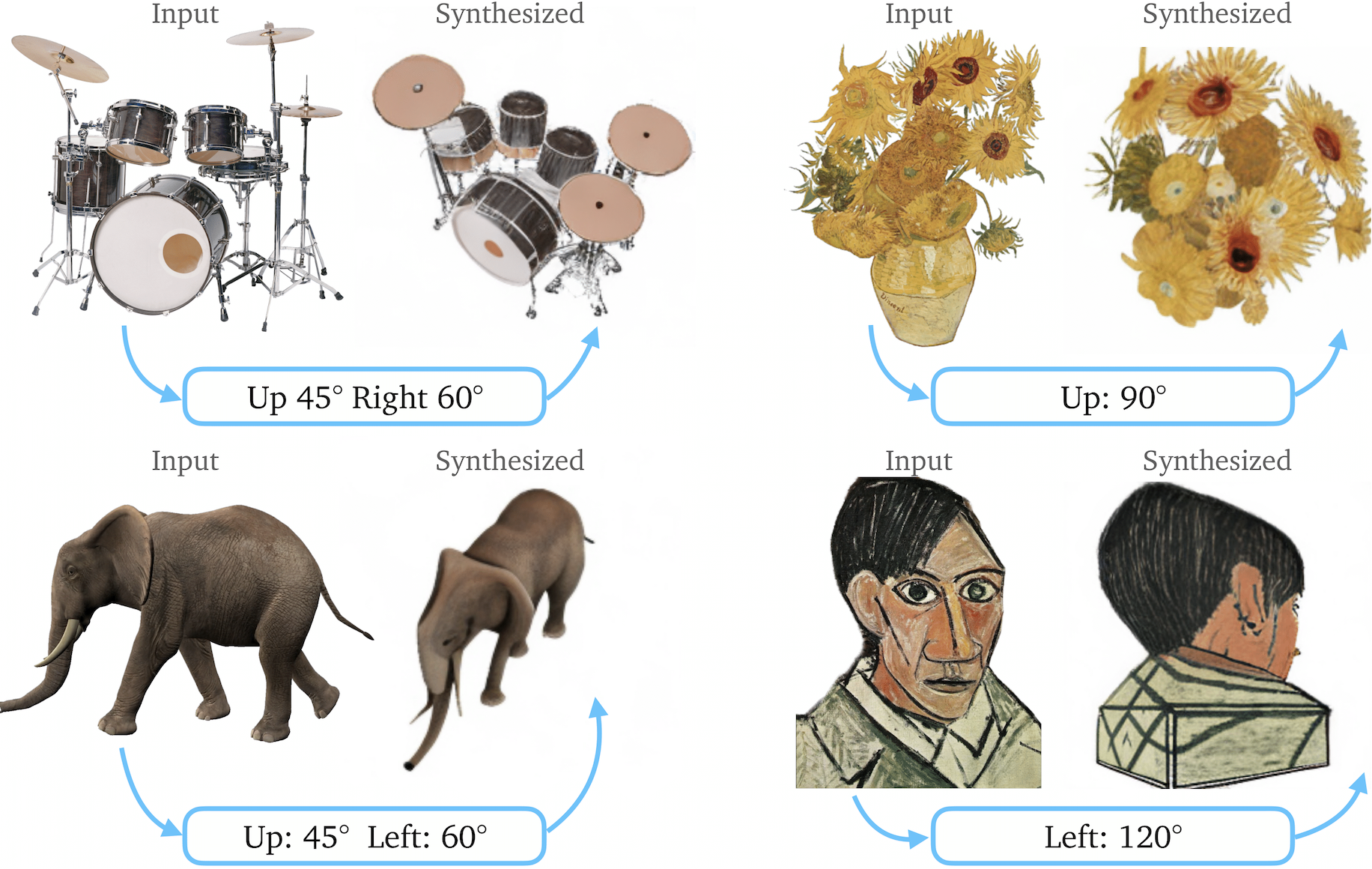

Zero-1-to-3: Zero-shot One Image to 3D Object

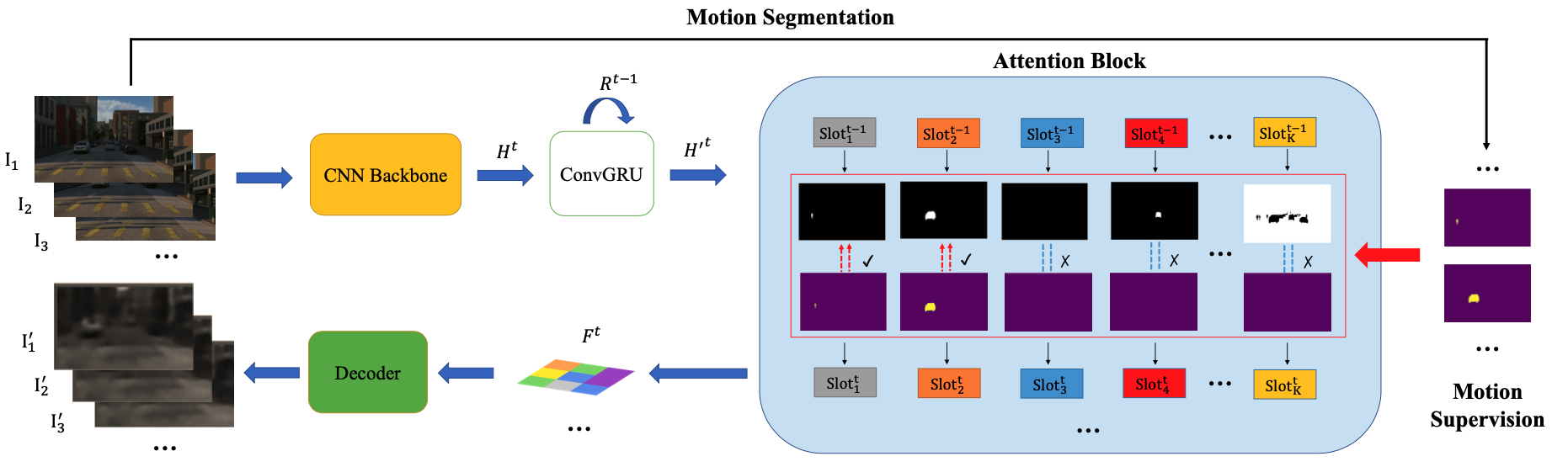

We introduce Zero-1-to-3, a framework for changing the camera viewpoint of an object given just a single RGB image. To perform novel view synthesis in this under-constrained setting, we capitalize on the geometric priors that large-scale diffusion models learn about natural images. Our viewpoint-conditioned diffusion approach can further be used for the task of 3D reconstruction from a single image.
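To make "viewpoint-conditioned" concrete, the following is a minimal sketch of one way a requested camera change could be turned into a small conditioning vector for a diffusion model. The spherical parameterization (polar angle, azimuth, radius), the `Viewpoint` type, and the function name `relative_viewpoint_embedding` are assumptions made for illustration; the text above does not specify how the viewpoint is encoded.

```python
import math
from typing import NamedTuple, Tuple


class Viewpoint(NamedTuple):
    """Hypothetical camera pose on a sphere centered on the object.

    Angles are in radians; radius is in arbitrary scene units.
    """
    polar: float
    azimuth: float
    radius: float


def relative_viewpoint_embedding(
    source: Viewpoint, target: Viewpoint
) -> Tuple[float, float, float, float]:
    """Encode the camera change from `source` to `target` as a 4-vector.

    Assumed layout: (delta polar, sin(delta azimuth), cos(delta azimuth),
    delta radius). The sin/cos pair keeps the azimuth term continuous
    across the 0 / 2*pi wrap-around.
    """
    d_polar = target.polar - source.polar
    d_azimuth = target.azimuth - source.azimuth
    d_radius = target.radius - source.radius
    return (d_polar, math.sin(d_azimuth), math.cos(d_azimuth), d_radius)


if __name__ == "__main__":
    # Input view: camera level with the object, 1.5 units away.
    src = Viewpoint(polar=math.pi / 2, azimuth=0.0, radius=1.5)
    # Requested view: raised by 30 degrees, rotated 90 degrees, pulled back.
    dst = Viewpoint(polar=math.pi / 3, azimuth=math.pi / 2, radius=2.0)
    print(relative_viewpoint_embedding(src, dst))
```

In a setup like this, the resulting vector would be supplied to the denoising network alongside the input image, so the same image can be re-rendered from different requested viewpoints. Encoding the relative change rather than absolute poses means the input image never needs a known camera pose of its own.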